In 2018, Clarifai made headlines for reasons it likely didn’t intend. The New York-based AI company, known for its work in computer vision, became a flashpoint in the debate over military AI ethics when it was revealed to be working with the Pentagon on Project Maven, a controversial program aimed at analyzing drone imagery with machine learning.

The company defended its role in the project as a way to reduce civilian casualties through greater targeting accuracy. But internal dissent followed. Several employees raised ethical concerns. Some resigned. Others accused the company of downplaying the project’s military nature and failing to report a security breach in a timely manner. Former director of marketing Liz O’Sullivan publicly criticized the company and eventually quit, stating she could not support its willingness to contribute to autonomous weapons systems.

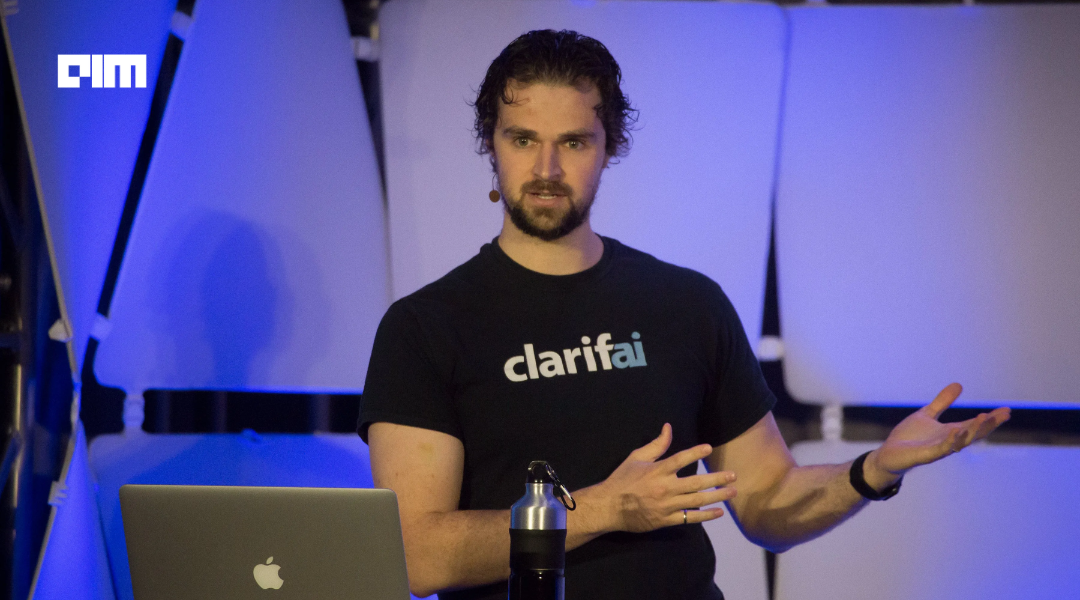

Clarifai founder and CEO Matt Zeiler told employees at the time that internal ethics officers were unsuitable for a startup and that AI would eventually be used to build more accurate, autonomous weapons. The fallout left Clarifai with a reputation for prioritizing growth and opportunity over ethics: an image that proved hard to shake.

Seven years later, Clarifai is trying to shift that narrative.

This month, the company launched AI Runners, a tool that allows developers and MLOps teams to deploy AI models locally or on private servers while still tapping into Clarifai’s cloud-based orchestration and APIs. The company says the tool is designed to meet the increasing demands of agentic AI systems, which require flexible compute and secure deployment across varied environments.

Clarifai’s Strategy Shift Is Noticeable

The AI Runners product marks an evolution in Clarifai’s strategy. The company now emphasizes infrastructure, developer tooling, and orchestration over headline-grabbing applications of AI. Instead of building battlefield tools, Clarifai wants to help others manage AI workloads securely and efficiently, offering what it describes as a vendor-agnostic, scalable platform that integrates with both cloud and on-premises infrastructure.

Alfredo Ramos, Clarifai’s Chief Product and Technology Officer, compared AI Runners to “ngrok for AI models,” a reference to ngrok, a tool that exposes services running on a local machine to the internet through a secure tunnel. The pitch is that users can keep proprietary models and data on their own machines while still benefiting from scalable APIs, autoscaling, and monitoring through Clarifai’s platform.

Whether this technical differentiation will hold is unclear. Open-source projects and cloud providers alike already offer secure local deployment options. Clarifai says it is the only platform to offer this level of flexible integration with a managed API, but that claim is difficult to verify independently.

Clarifai is not the only company attempting to position itself as AI infrastructure. Open-source projects like Hugging Face’s Transformers and Text Generation Inference allow organizations to run models locally with community support. Scale AI has focused more on training and data labeling, while Clarifai emphasizes deployment flexibility and infrastructure control, which may appeal to organizations with strict governance needs.

The launch builds on the company’s December 2024 release of a compute orchestration platform for managing GPU workloads across public cloud and on-premises hardware. Taken together, the announcements point to a broader shift: Clarifai wants to serve as foundational infrastructure rather than as a provider of AI applications.

Defense Partnerships Continue

Despite its shift in messaging, Clarifai has not stepped away from government and defense engagements. By June 2024, it had been designated “awardable” on the Department of Defense’s Tradewinds Solutions Marketplace, a formal recognition that the company meets key requirements for federal AI and ML contracts. In October 2024, it partnered with Crimson Phoenix to expand AI and data labeling capabilities for the defense and intelligence communities, including U.S. Army initiatives such as Autonomous Combat Casualty Care.

These developments suggest that Clarifai’s repositioning is not a retreat from government work. The company now presents itself as an infrastructure provider while continuing to pursue defense applications, albeit through more institutional channels.

Ethics in AI, Revisited

Clarifai’s repositioning comes at a time when the boundaries around “ethical” AI have grown more ambiguous. In February 2025, Google dropped its earlier pledge not to develop AI for weapons, now permitting work in national security under certain conditions. OpenAI, originally a nonprofit focused on “benefiting humanity,” recently signed a $200 million contract with the U.S. military and has moved to scrap the cap on its investors’ profits.

Work like Clarifai’s on Project Maven might no longer trigger the same backlash. Silicon Valley’s reluctance to engage with the defense sector has eroded, replaced by arguments that AI is essential to national security and that the U.S. cannot afford to fall behind rivals like China.

What’s clear is that Clarifai has largely moved away from public discussions of ethics. There’s no mention of internal oversight mechanisms or governance structures in recent announcements. The focus is on technical performance, deployment flexibility, and developer usability. That may be a strategic choice. Ethics, for now, remain difficult to market unless paired with clear policy frameworks.