The software industry is producing code at an unprecedented pace, much of it thanks to generative AI. But this acceleration is accompanied by a sharp rise in security vulnerabilities, and many companies aren’t adapting fast enough. In a recent episode of Bloomberg Intelligence’s Tech Disruptors Podcast, Snyk CEO Peter McKay offered a grounded assessment of where cybersecurity is falling short and what organizations need to change if they want to stay protected.

“We’re in probably the most insecure phase of software development ever,” McKay said. According to him, developers are now writing 20 to 30 percent more code than before, but the output is 30 to 40 percent more vulnerable. AI coding assistants like GitHub Copilot, Windsurf, and Cursor, which generate code by learning from public repositories, are compounding the problem by reproducing outdated or insecure patterns. Developers may not understand or review what’s generated, and attackers are noticing.

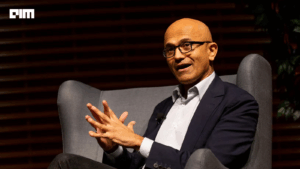

The scale of the shift is substantial. Microsoft CEO Satya Nadella recently said AI is responsible for 30 percent of code in some company repositories, a figure expected to rise. While productivity gains are clear, McKay argues that the rapid adoption of generative tools has “put a strain on security teams trying to keep up.” The result is a widening gap between how fast code is written and how effectively it can be secured.

An Expanding Attack Surface and the Risk of Complacency

Beyond old vulnerabilities resurfacing in greater numbers, AI-native software is introducing new and poorly understood attack vectors. McKay emphasized that with the rise of agent-based architectures businesses are entering a new era of risk. “If I have a thousand of these agents and I have agents talking to agents… there’s a lot of damage that could come if someone was able to take over one of these,” he warned.

Attacks such as prompt injection and data poisoning, once largely theoretical, are beginning to appear in the wild. Prompt injection occurs when an attacker subtly manipulates input prompts to override an AI system’s intended behavior. Data poisoning, meanwhile, targets AI models during training by introducing misleading or malicious data, compromising the integrity of the entire system. McKay drew parallels to the early days of the cloud transition, when moving from on-premise infrastructure to hosted environments opened up new security gaps. The difference now, he suggested, is that the speed of change is even more destabilizing, and few companies are designing security in from the outset.

Despite mounting risks, most organizations continue to treat security as an afterthought. “The more sophisticated companies are thinking about building security by default from the beginning,” McKay said, but he acknowledged that this mindset is not yet widespread. Many businesses are still prioritizing AI-driven productivity gains without considering the long-term consequences of insecure code. According to McKay, this leaves them dangerously exposed to what he sees as an inevitable reckoning.

“We’re probably six months away from seeing a real AI cyber attack,” he predicted. By that, he meant a large-scale incident that originates from vulnerabilities in AI-generated or agent-based applications: an event comparable in impact to the SolarWinds or Log4j breaches. If and when that happens, McKay expects the industry’s attitude toward AI security will change overnight.

A Push for Proactive, Embedded Security

For McKay, the only sustainable solution is to integrate security throughout the software development lifecycle. His prescription aligns with the principles of DevSecOps, but with an emphasis on speed and automation. Rather than waiting for code to be audited at the end of the cycle, security tools should be embedded directly into developers’ workflows: flagging and remediating issues in real time, ideally as the code is written.

However, these tools are not foolproof. Experts caution that AI-generated code must still be reviewed with the same scrutiny as human-written code. LLMs are trained on public datasets, which can include outdated or vulnerable patterns. Without careful oversight, developers may assume generated code is secure simply because it compiles or appears to work. That false confidence introduces risk, especially when intellectual property, user data, or business-critical logic is involved.

McKay acknowledged that security platforms must adapt as the definition of “developer” evolves. Increasingly, AI tools are enabling non-engineers, from marketers to finance teams, to write applications, further broadening the attack surface. Ensuring these new users have guardrails in place is just as important as securing the work of professional engineers.

With dozens of tools targeting overlapping aspects of the software lifecycle. McKay contends that consolidation is inevitable and necessary. He argued that platforms must offer both breadth and deep integration to be effective. Whether this strategy proves more sustainable than the point-solution model remains to be seen, particularly as competitors like Palo Alto Networks and Wiz pursue their own acquisition-heavy expansion strategies.