When DeepMind’s Genie 3 hit the public eye, the industry did what it always does with a dazzling demo: it gasped, debated whether we were suddenly a few lines of code away from a Holodeck, then turned to the harder question no one can afford to ignore: does this convince the businesses that actually have to ship products? Demis Hassabis, DeepMind’s CEO, framed the work in exactly the register that amplifies the awe: “We want to build what we call a world model, which is a model that actually understands the physics of the world,” he said, arguing that generating the world back again is a litmus test for a model’s depth.

That claim is intoxicating because Genie 3 does something earlier world models couldn’t. From a text prompt it spins up a 720p, 24fps 3D scene that you can walk through; it remembers where you left the paint on the wall; it lets you change the weather and watch events unfold. DeepMind’s researchers call this emergent consistency: a memory that simply appeared from training rather than from hard-coded rules. For cognitive scientists and dreamy product thinkers, it reads like proof that AI is learning to “think” about the physical world. “That part of the world is the same as you left it, which is mind-blowing, really,” Hassabis said.

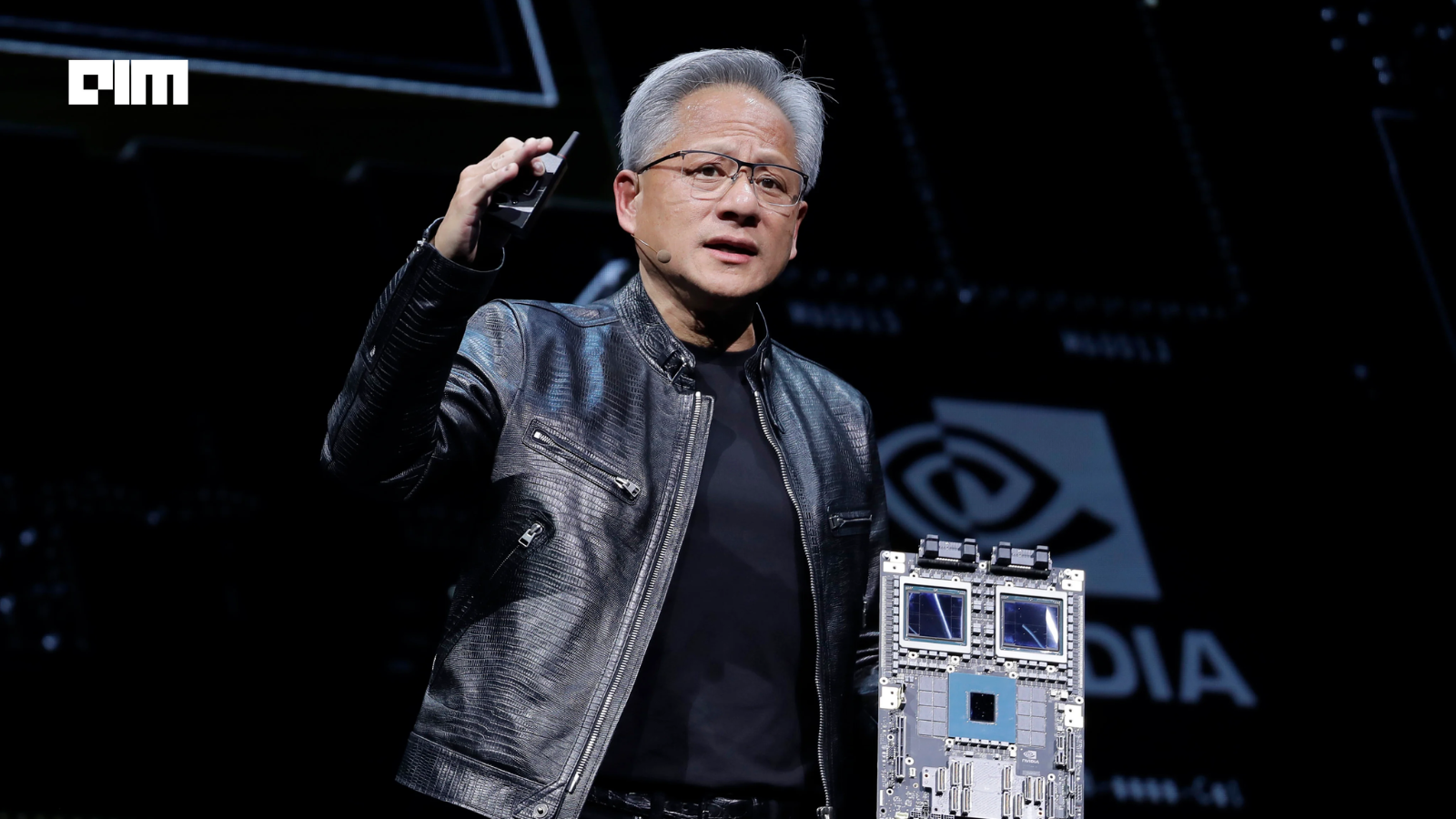

But business leaders don’t buy wonder; they buy outcomes, predictability and a way to make the numbers add up. That’s why NVIDIA’s response last week, not a single model but an industrial stack, looks like a more consequential product announcement for companies planning to put robots and autonomy into factories, stores and fleets. At SIGGRAPH, NVIDIA rolled out not just new models but new libraries, neural reconstruction tools, integrations with OpenUSD and explicit pipelines for synthetic data and physics-aware rendering: the kind of plumbing that turns an experiment into a repeatable deployment. As Rev Lebaredian, NVIDIA’s Omniverse head, put it: “By combining AI reasoning with scalable, physically accurate simulation, we’re enabling developers to build tomorrow’s robots and autonomous vehicles that will transform trillions of dollars in industries.”

Genie 3 bundles generation and perception in one elegant package: it creates the world and, at the same time, models that world’s future. That elegance is also its weakness. Auto-regressive generation accumulates error, because each generated frame becomes the input for the next; the model’s action space is currently constrained; and its memory horizon is measured in minutes, not the multi-hour or multi-day simulations real training curricula require. DeepMind is candid about these limits: the demos sometimes botch fine details like snow dynamics, and the model supports only a few minutes of continuous interaction. The company also stresses responsibility as it scales the tech.
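
A toy sketch makes the compounding concrete. It is not DeepMind's method or a measurement of Genie 3: it assumes, purely for illustration, that every generated frame inherits the previous frame's error and adds a fixed 0.1% relative error of its own. The function name `rollout_drift` and the 0.1% figure are hypothetical; only the 24fps frame rate comes from the description above.

```python
def rollout_drift(per_step_error: float, steps: int) -> float:
    """Relative drift after `steps` autoregressive steps.

    Toy assumption: each step scales the accumulated state by
    (1 + per_step_error), because the model consumes its own
    slightly wrong output as the next input.
    """
    return (1.0 + per_step_error) ** steps - 1.0


FPS = 24            # frame rate cited for Genie 3
PER_FRAME = 0.001   # hypothetical 0.1% error per generated frame

if __name__ == "__main__":
    for seconds in (1, 10, 60):
        frames = FPS * seconds
        drift = rollout_drift(PER_FRAME, frames)
        print(f"{seconds:>3}s ({frames:>4} frames): drift {drift:.1%}")
```

Under this assumption the drift after one minute is larger than the simple sum of the per-frame errors (1,440 frames × 0.1%), which is why a horizon of minutes is hard to stretch to hours without some external source of ground truth.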

NVIDIA’s idea is the opposite: break the job into auditable parts. Capture a scene with neural reconstruction into an OpenUSD representation, run deterministic physics and ray-traced rendering where fidelity matters, and use distilled world models where variability and scale are required. The company showed how a distilled Cosmos Transfer can collapse a 70-step process into a one-step flow for RTX servers, and it positioned Cosmos Reason, a 7B reasoning VLM, as the brain that can turn synthetic data into planning and annotation primitives for real robots. Those are exactly the primitives engineering teams say they need: labeled, reproducible datasets; deterministic simulators; and a path from prototype to production.

So what does the boardroom-careful executive take from the spectacle? First, that Genie 3 matters, not because it will immediately run your warehouse, but because it redraws the boundary of possibility. Hassabis’s point that world models are “a stepping stone on the path to AGI” is not marketing hyperbole; it is a sober statement about capability trajectories. Second, that the practical work of turning imagination into serviceable autonomy is brittle and mundane: data pipelines, simulation fidelity, interoperability standards and predictable compute costs. NVIDIA’s playbook looks mundane because the hard commercial problems are mundane.

That tension (spectacle versus stack) will shape purchasing decisions for the next three to five years. Early adopters in autonomous vehicles, logistics and manufacturing will experiment with generative worlds for rapid ideation and scenario diversification, but they will rely on modular, auditable stacks to certify behavior, debug failures and meet regulatory demands. The real business test isn’t whether an AI looks real for a minute; it’s whether it helps reduce downtime, shrink annotation costs, or lower injury risk across millions of hours of operation.

If there is a likely ending to this story, it is one of convergence rather than annihilation. Genie-style models will borrow structured outputs to plug into USD pipelines; platform vendors will ingest neural advances into distilled, production-grade flows. The companies that win will be those that can hold both truths: they can imagine new worlds with a generative model and, crucially, turn that imagination into repeatable, auditable systems that pay the bills. For leaders, the practical implication is this: you must fund both the future and the plumbing.