Millions of people are turning to chatbots for everything from news summaries to mental health advice. Former CNN anchor and Meta executive Campbell Brown is building a company to ensure those systems get it right.

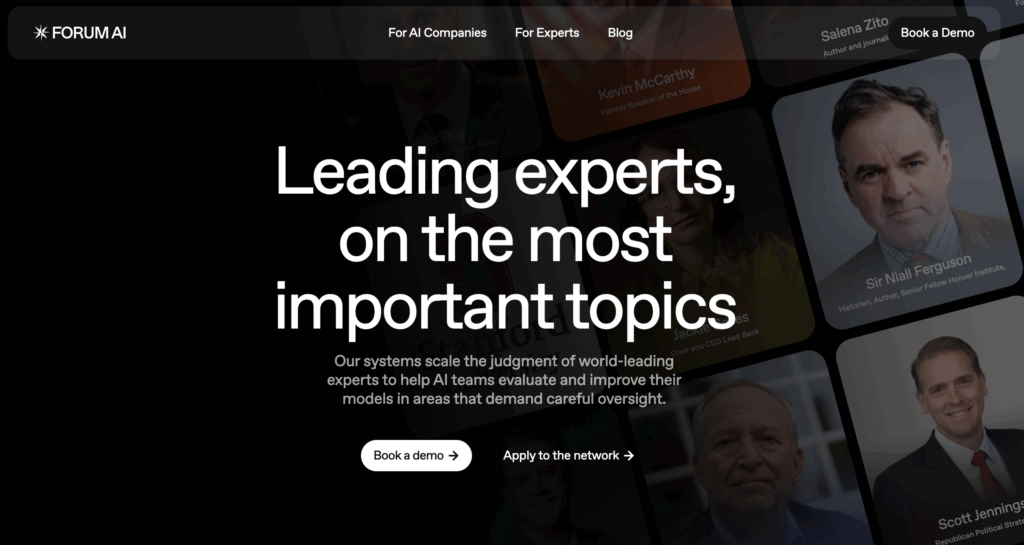

Forum AI, co-founded with Robbie Goldfarb, who also worked at Meta on AI trust and safety, has raised $3 million in seed funding led by Lerer Hippeau with participation from Perplexity AI’s venture fund. The company evaluates how large language models such as ChatGPT, Claude, and Gemini handle complex subjects including politics, foreign affairs, economics, and mental health.

“AI is already shaping how people understand the world,” Brown said. “We built Forum AI to make sure these systems reflect human expert judgment, not just statistical accuracy.”

New Approach to Evaluating AI

Forum AI’s work begins with assessing how models respond to subjective and high-stakes questions. When chatbots address topics like inflation, geopolitical conflicts, or mental health, the company’s network of experts evaluates whether responses demonstrate appropriate tone, balance, and context while identifying missing perspectives and hidden bias.

The network includes figures such as Larry Summers, Kevin McCarthy, Fareed Zakaria, Niall Ferguson, Van Jones, Scott Jennings, Salena Zito, and Rob Kee, among others. Institutional partners include the Mount Sinai Health System, Cleveland Clinic, the Stanford Institute for Human-Centered AI, the Manhattan Institute, and the Atlantic Council.

These experts represent fields such as economics, politics, intelligence, foreign policy, and healthcare. Many have worked in government, academia, or journalism and bring decades of real-world experience to assessing how AI systems communicate information.

Brown and Goldfarb describe their mission as building the infrastructure needed to make AI trustworthy on questions where judgment matters as much as data. “The systems being built today lack the judgment to handle complex scenarios reliably,” they wrote. “AI tools are already explaining the news, educating students, and offering mental health support, yet users rarely know how those answers are formed or what context is missing.”

Expert Review as a Human Layer

Forum AI uses a process similar to peer review in academic publishing. Experts from its network evaluate AI outputs, note missing context, and suggest improvements. When gaps appear consistently across models, the experts create new content or provide real-time analysis as events unfold. An economist might highlight historical patterns in market movements, or a foreign policy expert might explain regional dynamics behind diplomatic developments.

The company began with politics and world affairs before expanding into healthcare. Conversations with major AI developers revealed growing concern about how chatbots respond to users seeking emotional or medical advice. “When teenagers ask chatbots mental health questions, the tone of the response really matters,” Brown said. “It has to be handled with care.”

Forum AI now works with Cleveland Clinic and Mount Sinai to evaluate how chatbots answer sensitive mental health queries and to provide accurate and compassionate content when needed. Many of the organization’s partners are nonprofits that see AI as a natural extension of their mission to ensure people have access to reliable information.

Lessons from Meta

Brown’s experience at Meta shaped her perspective on the need for oversight. As head of news and media partnerships, she oversaw fact-checking programs and the launch of Facebook News. She said those efforts showed how difficult it is to balance scale, accuracy, and public trust. “Even well-intentioned systems can fail to deliver necessary context and can reinforce hidden bias,” Brown said. “AI could make these problems exponentially worse, not because it is inherently biased, but because it is opaque.”

Goldfarb’s background in AI safety also informed the company’s structure. Both founders saw how recommendation algorithms and AI systems determine what billions of people see each day. Their work at Meta convinced them that future systems must integrate expert review before problems escalate.

The idea for Forum AI also came from Brown’s personal life. Watching her teenage children use AI chatbots for homework and information sparked concern about how much trust people place in algorithmic answers. “Political bias misses the deeper issue, which is transparency,” she wrote in a company post. “An AI answer about the economy, healthcare, or politics sounds authoritative. Even when sources are shown, people rarely check them. Users trust the AI’s synthesis without examining the material behind it.”

A Collaborative Model for AI Reliability

Forum AI operates behind the scenes rather than as a consumer product. It provides independent evaluations to model developers and research partners seeking to understand how their systems perform under scrutiny. The company’s work aligns with a broader push for accountability as governments in the United States and Europe explore regulations for AI transparency and bias mitigation.

For Brown, the goal is not to determine what is right or wrong but to ensure that diverse perspectives and qualified expertise are reflected in AI outputs. “Many issues are not simply left or right,” she said. “We are trying to help AI capture nuance.”

The company’s model is designed to complement, not replace, media or research institutions. “When people talk about AI partnerships today, they often mean licensing deals or data agreements,” Brown said. “Forum AI is meant to support those efforts by ensuring that when content from experts or journalists is used by AI systems, it retains balance and accuracy.”

Brown sees Forum AI as a response to the growing role of AI in shaping public understanding. “We watched algorithms determine what billions saw,” she said. “Now we are watching AI systems become the next gateway to information. The same challenges are emerging, and this time we can address them earlier.”

The company’s mission is to make sure that when people ask AI about the economy, global conflict, or mental health, the answers reflect informed human judgment. “Getting it wrong on issues like war, health, or the economy has real consequences,” Brown said. “Our work is about making sure the information people receive from AI carries the depth of expertise these subjects deserve.”