Luminal has closed a $5.3 million seed round to build a new GPU programming framework aimed at tackling the software bottlenecks facing modern AI workloads.

The round was led by Felicis Ventures, with participation from prominent angel investors including Paul Graham, Guillermo Rauch, and Ben Porterfield.

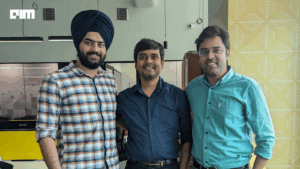

The company was founded by Joe Fioti, along with Jake Stevens (formerly at Apple) and Matthew Gunton (formerly at Amazon). As AI models scale in size and complexity, Luminal argues that raw GPU hardware is not enough: engineers repeatedly rebuild low-level GPU code paths, which leads to fragmentation, poor reproducibility, and hard-to-debug performance issues. The funding is aimed at building a unified software layer that simplifies and standardizes GPU usage for AI teams.

The Fragmented World of GPU Engineering

GPU programming inside AI companies is rarely straightforward. Engineers are often forced to choose between speed and stability, with most workflows landing somewhere in the middle. Fioti saw this tension clearly during his years working on chip design, where the limits of the software stack became impossible to ignore.

As he put it, “You can make the best hardware on earth, but if it’s hard for developers to use, they’re just not going to use it.” Even well-funded teams maintain collections of custom kernels, patches, and one-off optimizations written under tight deadlines.

This repeated rebuilding of GPU code paths leads to three persistent issues. First, reproducibility suffers, as the same model can behave differently depending on the environment or the specific kernel version used. Second, debugging drags on, because GPU errors rarely surface with clear traces. Third, development cycles stretch out because teams must revisit the same low-level problems for every new model variant. Without a reliable, shared framework, companies are left with maintenance burdens that grow as workloads scale.

How Luminal’s Framework Works

Luminal approaches this problem by constructing a unified compiler and runtime layer that sits directly between machine-learning frameworks and GPU hardware. Instead of requiring engineers to hand-craft kernels or adjust low-level functions every time they ship a new model, Luminal automates this step. The system rewrites computation graphs, generates a large set of candidate kernels, and then benchmarks those kernels to identify the fastest, most stable option.
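The article does not describe Luminal's internals or API, so the following is only a minimal, CPU-only Python sketch of the generic generate-and-benchmark idea described above. It assumes that a "candidate kernel" is a blocked matrix multiply parameterized by tile size; `make_tiled_matmul`, `autotune`, and the tile sizes are hypothetical names and values chosen for illustration, and the graph-rewriting step is left out entirely.

```python
import math
import time
from typing import Callable, List, Sequence, Tuple

Matrix = List[List[float]]
Kernel = Callable[[Matrix, Matrix], Matrix]


def reference_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive matmul used as the correctness oracle for every candidate."""
    k, m = len(b), len(b[0])
    return [[sum(row[p] * b[p][j] for p in range(k)) for j in range(m)] for row in a]


def make_tiled_matmul(tile: int) -> Kernel:
    """Hypothetical candidate generator: a blocked matmul with a given tile size."""
    def kernel(a: Matrix, b: Matrix) -> Matrix:
        n, k, m = len(a), len(b), len(b[0])
        c = [[0.0] * m for _ in range(n)]
        for i0 in range(0, n, tile):
            for p0 in range(0, k, tile):
                for j0 in range(0, m, tile):
                    for i in range(i0, min(i0 + tile, n)):
                        for p in range(p0, min(p0 + tile, k)):
                            a_ip, b_row, c_row = a[i][p], b[p], c[i]
                            for j in range(j0, min(j0 + tile, m)):
                                c_row[j] += a_ip * b_row[j]
        return c
    return kernel


def matches(out: Matrix, expected: Matrix, tol: float = 1e-9) -> bool:
    """Element-wise closeness check between a candidate's output and the oracle."""
    return all(
        math.isclose(x, y, rel_tol=tol, abs_tol=tol)
        for out_row, exp_row in zip(out, expected)
        for x, y in zip(out_row, exp_row)
    )


def benchmark(kernel: Kernel, a: Matrix, b: Matrix, repeats: int = 3) -> float:
    """Best-of-N wall-clock time, which damps one-off scheduling noise."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        kernel(a, b)
        best = min(best, time.perf_counter() - start)
    return best


def autotune(
    a: Matrix, b: Matrix, tiles: Sequence[int] = (2, 4, 8, 16)
) -> Tuple[int, List[Tuple[float, int]]]:
    """Generate candidates, drop incorrect ones, and return the fastest tile size."""
    expected = reference_matmul(a, b)
    results: List[Tuple[float, int]] = []
    for tile in tiles:
        kernel = make_tiled_matmul(tile)
        if not matches(kernel(a, b), expected):
            continue  # a fast but wrong kernel is never eligible
        results.append((benchmark(kernel, a, b), tile))
    if not results:
        raise ValueError("no candidate passed the correctness check")
    results.sort()  # fastest first; equal times fall back to the smaller tile
    return results[0][1], results


if __name__ == "__main__":
    a = [[float((i + j) % 5) for j in range(24)] for i in range(24)]
    b = [[float((i * j) % 7) for j in range(24)] for i in range(24)]
    best_tile, timings = autotune(a, b)
    print("selected tile size:", best_tile)
```

Checking every candidate against a reference before timing it matters: a search that only looks at speed could select a kernel that is fast because it is wrong. A real GPU autotuner also has to synchronize the device before reading the clock, since kernel launches are asynchronous; this CPU sketch sidesteps that.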

A key part of Luminal’s approach is a structured kernel search process that produces results that behave the same way across runs. This directly addresses reproducibility challenges that plague many current GPU workflows. The framework also gives developers a clear view of the selected kernels and performance paths, making it easier to diagnose regressions and understand how a model interacts with the hardware.
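The article does not say how Luminal makes its search repeatable. One generic technique, sketched here only under that caveat, is to summarize repeated timings with a median, treat candidates within a small tolerance of the best as tied, break ties with a fixed rule, and record each decision so later runs reuse it and developers can inspect it. `select_stable`, `SelectionLog`, the key format, and the 5% tolerance are hypothetical choices made for illustration, not Luminal's design.

```python
import json
import statistics
from typing import Dict, List, Tuple


def select_stable(timings: Dict[str, List[float]], tolerance: float = 0.05) -> str:
    """Choose a kernel in a way that reduces the chance of noise flipping the winner.

    `timings` maps a candidate name to repeated measurements in seconds.
    Candidates whose median lies within `tolerance` (relative) of the best
    median are treated as tied, and the tie is broken by name order.
    """
    if not timings:
        raise ValueError("no candidates to choose from")
    medians = {name: statistics.median(ts) for name, ts in timings.items()}
    best = min(medians.values())
    tied = sorted(name for name, m in medians.items() if m <= best * (1 + tolerance))
    return tied[0]


class SelectionLog:
    """Hypothetical record of the kernel chosen for each (op, shape) key.

    Reusing a recorded choice keeps later runs consistent, and dumping the
    record as JSON gives developers a readable view of what was selected.
    """

    def __init__(self) -> None:
        self.choices: Dict[str, str] = {}

    def choose(self, op: str, shape: Tuple[int, ...], timings: Dict[str, List[float]]) -> str:
        key = f"{op}:{'x'.join(str(d) for d in shape)}"
        if key not in self.choices:  # search only the first time a key is seen
            self.choices[key] = select_stable(timings)
        return self.choices[key]

    def report(self) -> str:
        return json.dumps(self.choices, sort_keys=True, indent=2)
```

The trade-off is explicit: the tie-break may pick a kernel up to `tolerance` slower than the measured best, in exchange for a choice that is less likely to change with ordinary timing jitter.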

Luminal’s integration with PyTorch allows teams to move a model from experimentation to production with less friction. By producing predictable kernel output and a cleaner debugging path, the company aims to remove the constant rework that typically accompanies scaling AI systems. The new funding will support deeper product development, expansion of GPU workload coverage, and new engineering hires to strengthen the framework.

What Makes It Stronger Than the Alternatives

The GPU-compiler space already includes widely used tools, yet many of them create practical challenges for teams building or serving large AI models. XLA has broad adoption across major frameworks and can target several hardware types, but it operates through strict operator patterns and several layers of abstraction. This structure makes performance behavior less transparent and can limit flexibility when teams experiment with new architectures or tailor custom kernels. Luminal addresses this by providing clearer visibility into kernel generation and by keeping results stable even as workloads shift.

TVM has a strong foundation in compiler research and is capable of producing high-performance kernels. Its tuning cycle, though, can be lengthy, requiring extended search sessions to reach a usable schedule, and because the schedules it finds depend on measurements taken during those sessions, reproducibility across builds becomes harder to maintain. Luminal avoids these issues by embedding its search process directly into the system, producing consistent output without long tuning phases. This helps teams maintain stable performance across versions without investing additional engineering time.

Some organizations still rely on in-house setups such as custom CUDA kernels. While these paths can be fast, they impose continuous maintenance as model shapes and hardware generations change. Luminal offers a single framework that behaves consistently across changing workloads without forcing engineers to rebuild kernels repeatedly. For companies training or serving large AI models, this reduces exposure to performance drift and swings in operational cost.

Fioti said, “It is always going to be possible to spend six months hand tuning a model architecture on a given hardware… but anything short of that, the all-purpose use case is still very economically valuable.”

Positioning Luminal in the AI-Infrastructure Market

Luminal’s seed round places the company in a clear and focused segment of AI infrastructure: the software layer responsible for translating models into the GPU instructions that determine speed, reliability, and operational cost. While many young companies compete on raw compute supply, Luminal addresses the root problem of how developers actually harness the hardware they already have.

The funding gives the company the ability to deepen its compiler systems, support more workload types, and strengthen its engineering bench at a time when AI development is constrained not only by GPU availability but also by the quality of the software that controls those GPUs.

By centering its product on reproducibility, predictable performance, and a cleaner development experience, Luminal is building the kind of groundwork that teams often lack as they scale from early prototypes to production-level models.