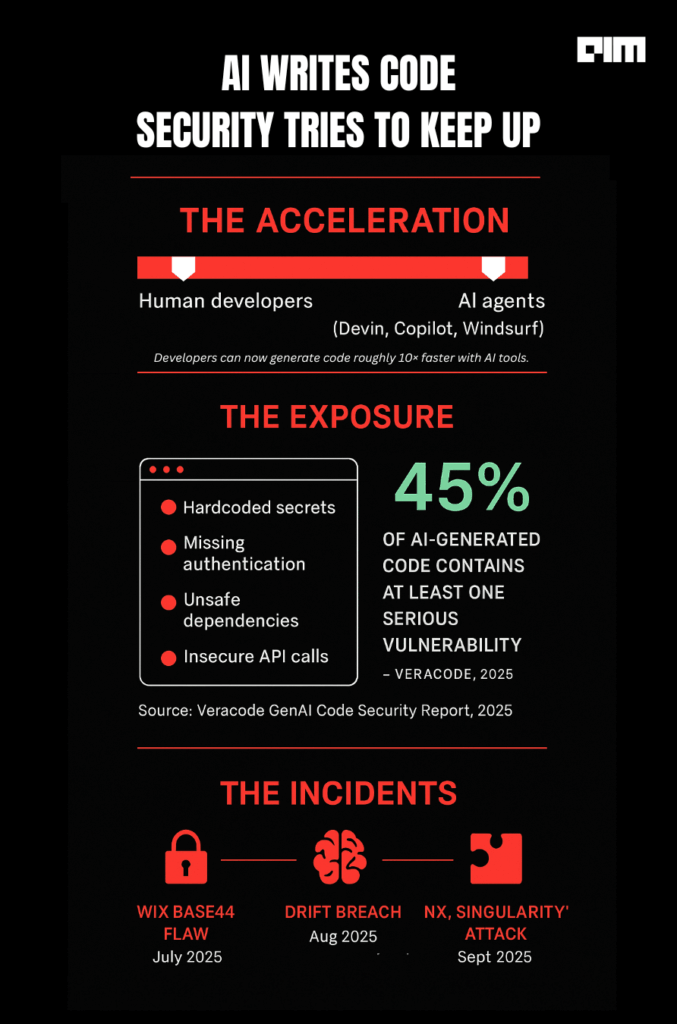

In July, researchers at Wiz uncovered a flaw inside Wix’s Base44 platform that let attackers bypass authentication and access private corporate apps using nothing more than a public app ID.

The discovery was straightforward, and that was the problem. As Wiz put it, the exploit “required only basic API knowledge,” meaning anyone could replicate it across multiple apps. Wix fixed the issue within 24 hours, but the incident showed how fast AI-driven development can outpace its own guardrails.

Other companies have faced similar exposure. The AI-powered chatbot firm Drift recently suffered a breach that exposed Salesforce data belonging to major clients, including Cloudflare and Google. Wiz’s chief technologist, Ami Luttwak, said the attackers used AI-generated code to steal digital tokens and impersonate legitimate software bots. “The game is open,” Luttwak told TechCrunch. “If every area of security now has new attacks, then it means we have to rethink every part of security.”

These incidents, combined with a stream of research showing AI-written code still riddled with classic bugs, have triggered a rush to rebuild security for the AI-coding era.

Security at the speed of AI

This week, Boston-based security company Snyk and San Francisco’s Cognition announced a partnership designed to make security run at the same speed as AI coding agents. The companies are integrating Snyk’s real-time scanning and automated remediation tools directly into Cognition’s AI developer agents, Devin and Windsurf.

“If anyone or any enterprise is vibe coding, we believe Secure At Inception is mandatory because it shifts security to the very first prompt,” said Snyk CEO Peter McKay. “It enables developers to build intelligent, trustworthy software right from the start.”

Cognition CEO Scott Wu called the partnership a fix for a widening velocity gap: “You shouldn’t have to sacrifice speed for security, or vice versa.”

The deal reflects a broader movement in the security industry to shift from reactive review to proactive prevention. OX Security launched VibeSec in September, describing it as the first platform to “stop insecure AI-generated code before it ever exists.” “The old plugin model was built for human typing speed,” said OX co-founder Neatsun Ziv. “The new reality is AI-driven code generation at machine speed, and that demands an equally new security model.”

GitHub and Microsoft are taking a similar route, extending the Copilot ecosystem with features such as Copilot Autofix and Security Copilot to scan and patch vulnerabilities as developers work.

An arms race to contain the code

Empirical data backs up the urgency. A 2025 Veracode study found that 45 percent of AI-generated code includes at least one serious vulnerability, a figure that has not improved in two years. Researchers at the University of Maryland and IBM independently confirmed the trend, showing that while AI models can now produce functioning code 90 percent of the time, nearly half of it fails basic security benchmarks.

Veracode CTO Jens Wessling summarized the issue bluntly in the company’s report: “LLMs may write working code, but they also write insecure code.”

The pattern repeats across studies. When researchers tested four prompting strategies on GPT-4o, code security deteriorated after just five iterations, introducing 37 percent more critical vulnerabilities than the initial output.

The result is a widening security backlog inside organizations already flooded with AI-written code. Checkmarx's annual survey found that a third of CISOs now estimate over 60 percent of their company's code is AI-generated, yet only 18 percent of organizations maintain an approved list of AI tools.

Snyk's "Secure At Inception" model and OX's "VibeSec" both aim to reverse that backlog by embedding scanning and remediation within the AI development process itself. Early pilot data suggests these approaches can significantly cut the number of unresolved vulnerabilities. A peer-reviewed paper published this year reported a 73 percent drop in vulnerabilities and a 68 percent improvement in code quality for a framework that combines static analysis and ML-based detection during code generation.
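To make "scanning during code generation" concrete, here is a minimal, hypothetical sketch of the idea in Python. It is not how Snyk, OX, or the cited framework actually work: `find_issues` and `gated_generate` are invented names, the two checks (calls to `eval`/`exec` and string literals assigned to secret-looking variables) stand in for a real static analyzer, and the ML-based detection half of the framework is left out entirely. The "model" is any function that turns a prompt into source code; output is accepted only once it scans clean, and otherwise the findings are fed back into the next prompt.

```python
import ast

# Hypothetical, deliberately tiny rule set standing in for a real scanner.
RISKY_CALLS = {"eval", "exec"}
SECRET_HINTS = ("password", "secret", "token")


def find_issues(source: str) -> list[str]:
    """Return simple static-analysis findings for a snippet of Python source."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return [f"syntax error: {err.msg}"]
    issues = []
    for node in ast.walk(tree):
        # Flag direct calls such as eval(...) or exec(...).
        if (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Name)
                and node.func.id in RISKY_CALLS):
            issues.append(f"line {node.lineno}: call to {node.func.id}()")
        # Flag string literals assigned to names that look like credentials.
        if (isinstance(node, ast.Assign)
                and isinstance(node.value, ast.Constant)
                and isinstance(node.value.value, str)):
            for target in node.targets:
                if isinstance(target, ast.Name) and any(
                        hint in target.id.lower() for hint in SECRET_HINTS):
                    issues.append(
                        f"line {node.lineno}: hard-coded credential in {target.id}")
    return issues


def gated_generate(generate, prompt: str, max_attempts: int = 3) -> str:
    """Re-prompt a code generator with scan findings until its output scans clean."""
    feedback = ""
    for _ in range(max_attempts):
        code = generate(prompt + feedback)
        issues = find_issues(code)
        if not issues:
            return code  # accepted: no findings from the scanner
        feedback = "\nFix these issues:\n" + "\n".join(issues)
    raise RuntimeError("generated code still failed the security scan")


if __name__ == "__main__":
    # Stand-in for an AI model: the first answer is insecure, the second is not.
    answers = iter([
        "API_TOKEN = 'abc123'\nresult = eval(user_input)\n",
        "import os\nAPI_TOKEN = os.environ['API_TOKEN']\n",
    ])
    code = gated_generate(lambda p: next(answers), "write a config loader")
    print(code)  # the second (clean) answer is returned
```

The design choice the sketch illustrates is placement: the check runs before generated code is accepted, rather than in a later review pass, which is the shift the vendors above describe.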

But even as the new tools roll out, attacks are evolving in parallel. Wiz's research team documented how AI-based development workflows have been exploited in recent supply-chain incidents, including "s1ngularity," in which attackers published malicious versions of the Nx JavaScript build system to npm and turned developers' locally installed AI coding tools into instruments for hunting secrets.

The sector's leaders agree that security now has to start at the same point AI-assisted coding does. As Jake Williams, a former NSA hacker and VP of R&D at Hunter Strategy, told Wired: "AI-generated material is already starting to exist in code bases. We can learn from advances in open-source software-supply-chain security, or we just won't, and it will suck."