“Immediate ROI with zero-implementation effort: Simply flip a switch to enable state-aware orchestration… total impact: 29%+ reduction in annual dbt-related compute costs,” reads the announcement on the dbt blog.

That claim adds a fresh data point to an ongoing battle over compute costs. Big cloud providers keep pushing new chips, new GPUs, and new data centers. dbt’s announcement suggests a different path: cheaper compute, and by extension cheaper AI, may come from smarter software, not just faster hardware.

Hardware gains, and their limits

The cloud giants continue to bet on silicon. In an interview with The Verge, AWS CEO Matt Garman described the company’s investments in custom AI chips and infrastructure while insisting that software must keep pace: “We refuse to choose between hardware and software… you have to do both.”

These bets make sense. A new chip or accelerator can deliver a big jump in throughput or energy efficiency. But hardware gains arrive in discrete steps: you wait for a new generation, commit capital, and contend with limits on fabrication, energy, and cooling.

Meanwhile, many inefficiencies lie elsewhere. Pipelines may recompute work unnecessarily. Inference may run every layer of a model when fewer would do. Data transformations may repeat identical operations. These costs persist even on the latest GPUs.

In quantization research, Rescaling-Aware Training for Efficient Deployment of Deep Learning Models on Full-Integer Hardware (Oct 2025) proposes calibrating rescale factors to reduce integer rescaling cost without accuracy loss. Another paper, Quantization Hurts Reasoning? (Apr 2025), shows that over-aggressive quantization can degrade model performance on reasoning tasks.

Taken together, those works make the case that algorithmic refinement can squeeze more out of existing hardware, within limits. The hardware gives you headroom; software fills it.
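To make “integer rescaling” concrete, here is a minimal Python sketch of generic symmetric 8-bit quantization, plus the fixed-point multiply-and-shift that integer-only hardware commonly uses in place of a floating-point rescale factor. It is not the method from either paper; the function names, the single per-tensor scale, and the 31-bit shift are illustrative assumptions.

```python
def quantize_symmetric(values, bits=8):
    """Map floats to signed integers using a single per-tensor scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats; per-value error is at most about scale / 2."""
    return [x * scale for x in q]


def fixed_point_multiplier(real_multiplier, shift_bits=31):
    """Approximate a real rescale factor M as an integer m with M ~= m / 2**shift_bits."""
    return round(real_multiplier * (1 << shift_bits))


def integer_rescale(acc, m, shift_bits=31):
    """Rescale an integer accumulator with one multiply and one shift (round half up)."""
    return (acc * m + (1 << (shift_bits - 1))) >> shift_bits


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.01]
    q, scale = quantize_symmetric(weights)
    print(q, scale)
    print(dequantize(q, scale))

    # Rescale an accumulator by 0.25 using only integer operations at run time.
    m = fixed_point_multiplier(0.25)
    print(integer_rescale(1002, m))  # 251
```

The multiply-and-shift replaces a floating-point multiply on every output, which is the kind of per-operation cost that rescaling-focused research tries to shrink further.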

Cutting costs in code

dbt’s state-aware orchestration is already available in preview. It recomputes only the models whose inputs have changed, which alone yields savings of around 10%, per the company’s blog post. Tuning freshness windows and skipping tests add further reductions, bringing the claimed total to a 29%+ drop in compute.
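The core idea behind “only recompute what changed” can be sketched as change detection over a dependency graph. The Python below is a minimal sketch, not dbt’s implementation: it assumes each model’s inputs can be summarized as text and fingerprinted with SHA-256, and the model names and the `models_to_rebuild` helper are hypothetical. A model is rebuilt when its own fingerprint differs from the last build or when any upstream model is being rebuilt.

```python
import hashlib
from graphlib import TopologicalSorter  # Python 3.9+


def fingerprint(text: str) -> str:
    """Hash a model's inputs (its code plus a summary of its source data)."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def models_to_rebuild(parents, current_inputs, previous_fingerprints):
    """Return the set of models that must be recomputed.

    parents: model -> set of upstream models it reads from
    current_inputs: model -> text summarizing its inputs right now
    previous_fingerprints: model -> fingerprint recorded at the last build
    """
    rebuild = set()
    # Visit upstream models before downstream ones.
    for model in TopologicalSorter(parents).static_order():
        changed = fingerprint(current_inputs[model]) != previous_fingerprints.get(model)
        upstream_rebuilt = any(p in rebuild for p in parents.get(model, ()))
        if changed or upstream_rebuilt:
            rebuild.add(model)
    return rebuild


if __name__ == "__main__":
    parents = {
        "stg_orders": set(),
        "stg_customers": set(),
        "orders_enriched": {"stg_orders", "stg_customers"},
        "customer_ltv": {"orders_enriched"},
        "customer_emails": {"stg_customers"},
    }
    last_build = {
        "stg_orders": "select * from raw.orders -- batch 41",
        "stg_customers": "select * from raw.customers -- batch 41",
        "orders_enriched": "join orders to customers",
        "customer_ltv": "sum order totals per customer",
        "customer_emails": "select email from stg_customers",
    }
    previous = {name: fingerprint(text) for name, text in last_build.items()}

    # Only the orders source received new rows since the last build.
    now = dict(last_build, stg_orders="select * from raw.orders -- batch 42")
    print(sorted(models_to_rebuild(parents, now, previous)))
    # ['customer_ltv', 'orders_enriched', 'stg_orders']
```

The rule here is deliberately conservative: a downstream model is rebuilt whenever a parent is, even if the parent’s output turned out identical. A real orchestrator could also compare output fingerprints to skip more work, at the cost of extra bookkeeping.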

Tristan Handy, CEO of dbt Labs, has put cost front and center. In a March 2025 interview, he said that “going forward… fundamental innovation around cost optimization and next-generation capabilities” would be a priority.

Software techniques such as quantization, pruning, and conditional compute are maturing. A model might skip layers or lower its bit width depending on input complexity, so not every inference pays full cost. The gains also stack: skipping work, quantizing, and reusing results each cut into whatever cost the others leave behind.
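Because each technique applies to whatever cost the previous one left, independent reductions combine multiplicatively rather than additively. The Python sketch below shows that arithmetic; the 30%, 50%, and 20% figures are made-up illustrations, not measurements from dbt or any paper, and the sketch assumes the reductions are independent of one another.

```python
from math import prod  # Python 3.8+


def combined_reduction(reductions):
    """Combine fractional cost reductions applied one after another.

    Each reduction r (0 <= r < 1) scales the remaining cost by (1 - r),
    so the total saving is 1 minus the product of those factors.
    """
    remaining = prod(1 - r for r in reductions)
    return 1 - remaining


if __name__ == "__main__":
    # Illustrative only: skip 30% of work, quantize away half of what is left,
    # then serve 20% of the remaining requests from a cache.
    print(round(combined_reduction([0.30, 0.50, 0.20]), 2))  # 0.72
    print(round(combined_reduction([0.10, 0.10]), 2))        # 0.19, not 0.20
```

The combined saving is always smaller than the naive sum of the individual percentages, since every later technique works on an already smaller base.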

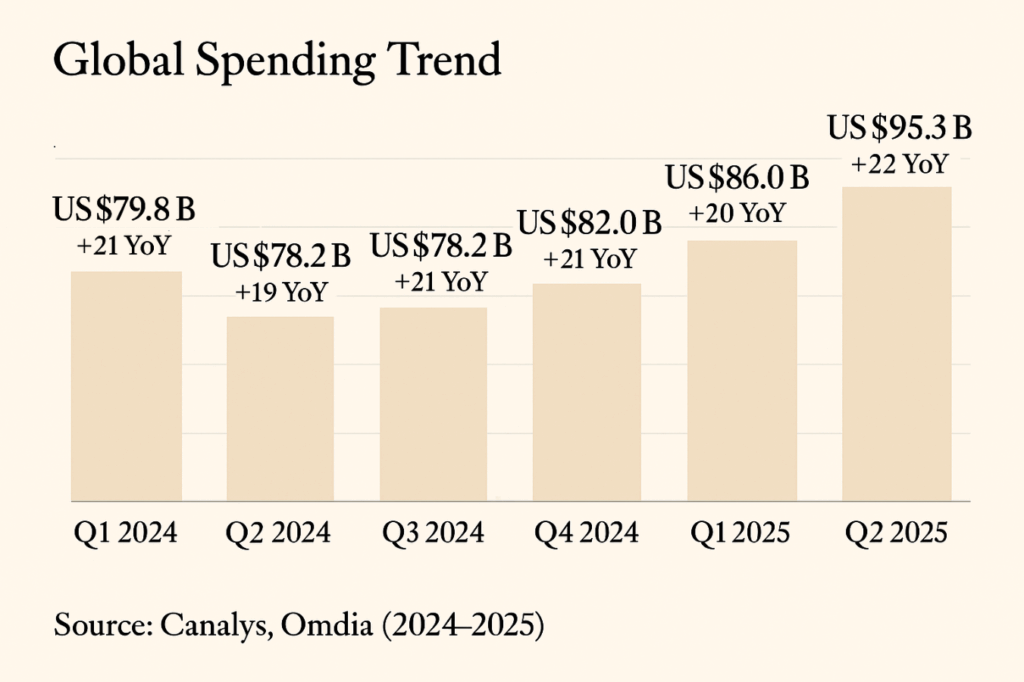

The a16z analysis LLMflation tracks how the inference cost of an LLM at a given capability level has fallen roughly 10× per year since 2022. It attributes much of that decline to improvements in model architecture, compiler optimizations, and runtime efficiency, not just raw hardware leaps.
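To see what a steady 10× annual decline implies, assume, as an idealization rather than a16z’s exact methodology, that the cost $c$ of a fixed level of capability falls by the same factor every year from a starting cost $c_0$. After $t$ years:

$$
c(t) = c_0 \cdot 10^{-t}, \qquad \frac{c(3)}{c_0} = 10^{-3} = \frac{1}{1000}
$$

Three years at that rate would cut the cost of equivalent capability roughly a thousandfold.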

More broadly, as models scale and usage patterns diversify, the share of cost that comes from waste and inefficiency tends to grow. Smart orchestration and pipeline hygiene attack that waste directly.

Still, software methods have limits. Pushing quantization too far can reduce answer quality on critical tasks. Some techniques need kernel or hardware support to be fully effective. Returns diminish: the first 20–30% of savings comes far more easily than the next few points. And every gain has to be weighed against the engineering effort and complexity it adds.

Even so, software is where most teams can act. You don’t need to build a new chip to try it: you can run experiments, measure the difference, and iterate.

In practice, teams should track cost per inference or compute per query, test quantization on low-risk workloads first, and invest in runtime libraries and infrastructure support. They should also pair these software techniques with disciplined deployment practices such as caching, batching, and dependency tracking.
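Tracking cost per query and caching repeated work fit together naturally. The Python sketch below is a hypothetical illustration: `run_model` stands in for an expensive inference call, the flat 10 compute units per call is an assumption, and the standard library’s `functools.lru_cache` serves repeated identical prompts without recomputing them.

```python
from functools import lru_cache


class CostTracker:
    """Hypothetical counter for compute spent and queries served."""

    def __init__(self):
        self.compute_units = 0
        self.queries = 0

    def cost_per_query(self):
        return self.compute_units / self.queries if self.queries else 0.0


tracker = CostTracker()


@lru_cache(maxsize=1024)
def run_model(prompt: str) -> str:
    """Stand-in for an expensive inference call; assumed to cost 10 units."""
    tracker.compute_units += 10
    return prompt.upper()


def answer(prompt: str) -> str:
    """Serve one query; repeated prompts hit the cache and add no compute."""
    tracker.queries += 1
    return run_model(prompt)


if __name__ == "__main__":
    for p in ["total revenue?", "top customers?", "total revenue?", "total revenue?"]:
        answer(p)
    print(tracker.queries, tracker.compute_units, tracker.cost_per_query())
    # 4 20 5.0
```

Caching like this is only safe when outputs are deterministic for a given input. If the model or its upstream data changes, the cache must be invalidated, which is where dependency tracking comes back in.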

That effort is already paying off. dbt says that for users who enable state-aware orchestration, the results are measurable: fewer runs, fewer compute hours, and lower cloud bills.

Hardware will never cease to matter. It gives you the foundation to scale. But for now, the highest-leverage frontier in reducing AI compute cost lies in software. That is where teams can extract real, repeatable savings.