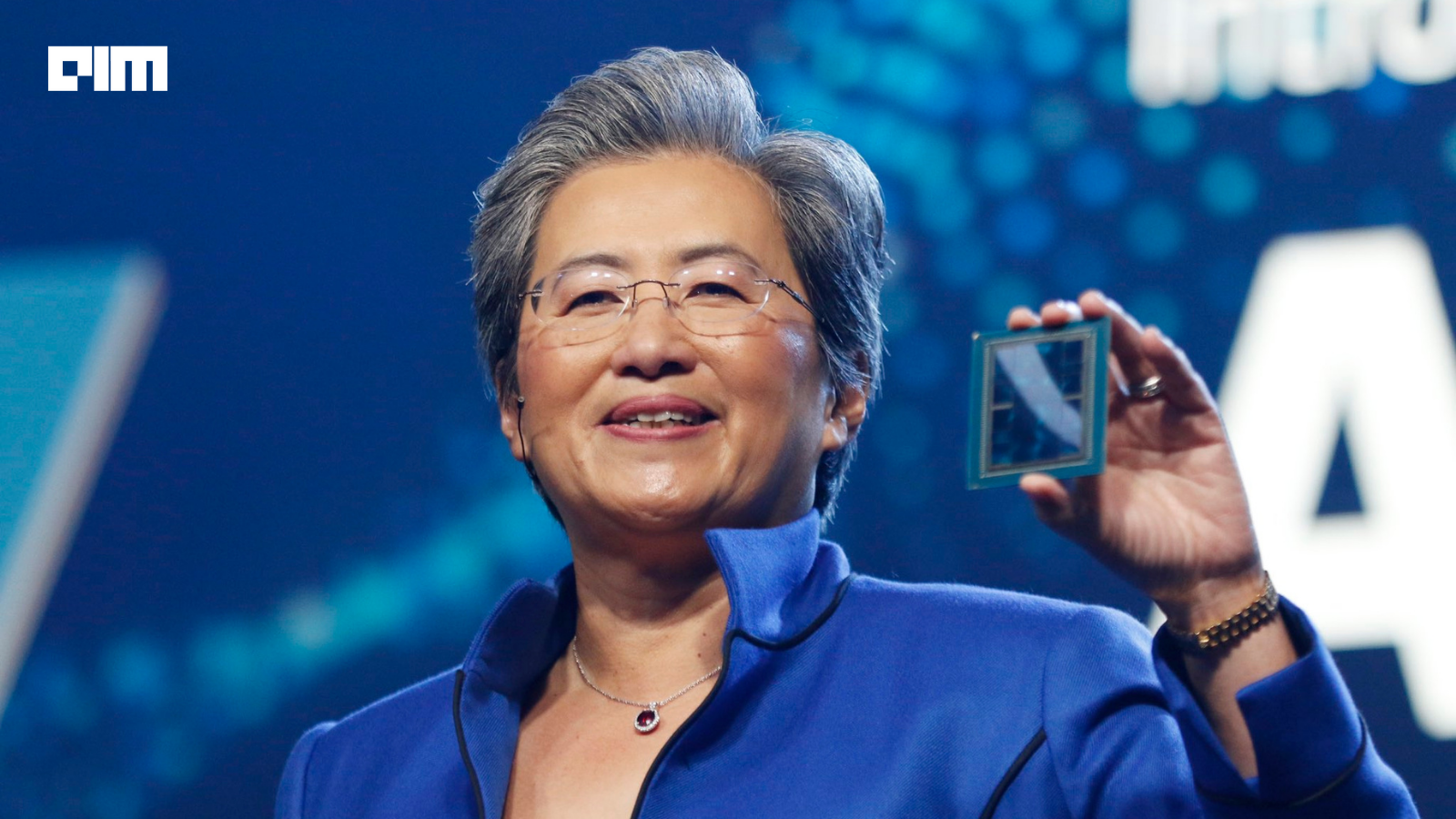

Advanced Micro Devices made a bold statement about its AI future this week. At its first analyst day in three years, CEO Lisa Su declared that AMD expects the data center chip market to reach $1 trillion by 2030, and positioned her company to capture a significant share of it.

More aggressively, AMD projects its earnings will triple, reaching $20 per share within the next three to five years, while revenue grows 35% annually across the entire business and 60% specifically in data center operations.

The announcement reflects a dramatic shift in AMD’s competitive posture. For years, the chipmaker has been a distant second to Nvidia in AI accelerators, watching its rival capture a massive share of the generative AI boom.

Now, with a transformative OpenAI deal in hand and a roadmap of next-generation products, AMD is signaling that the era of Nvidia’s unchallenged dominance may be ending.

The foundation for AMD’s bullish outlook was laid six weeks ago. In October, AMD signed a multiyear deal with OpenAI worth tens of billions in annual revenue. The arrangement includes an unusual component.

OpenAI received warrants to purchase up to 10% of AMD’s stock, structured to vest as shipment milestones are achieved. This isn’t just a supply contract; it’s a vote of confidence that reverberated through the semiconductor industry.

That confidence is reflected in AMD’s stock performance. Since the OpenAI deal announcement on October 6, AMD shares have risen 16%. The company’s stock has effectively doubled year-to-date, driven largely by successive announcements of major AI customer wins and the growing perception that AMD has finally cracked the code on competing with Nvidia at scale.

AMD’s finance chief, Jean Hu, quantified the OpenAI contribution, stating that the deal alone is expected to generate tens of billions in revenue over four years. Yet that’s only part of the story.

AMD has simultaneously established partnerships with Oracle and the U.S. Department of Energy, signaling that institutional AI infrastructure buyers are increasingly comfortable diversifying away from sole-source Nvidia.

Projections and Roadmap

AMD’s growth targets are aggressive by any measure. The company expects data center revenue to grow 60% annually through 2030. To reach $100 billion in annual data center revenue within five years, AMD needs to multiply this line item several times over; 60% annual growth sustained for five years compounds to roughly a tenfold increase. The company also projects a staggering 80% annual growth rate specifically in AI data center revenue.
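To make the compounding behind these targets concrete, here is a minimal sketch in Python. It uses only the growth rates and time frames quoted in the article; the function names are illustrative, and the assumption that each rate holds constant every year is a simplification rather than anything AMD has stated.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Factor by which a value grows after compounding annual_rate for years."""
    return (1.0 + annual_rate) ** years


def implied_annual_rate(multiple: float, years: int) -> float:
    """Constant annual growth rate that produces the given multiple over years."""
    return multiple ** (1.0 / years) - 1.0


# Growth rates quoted in the article (assumed constant each year for illustration).
targets = [
    ("company-wide revenue", 0.35),
    ("data center revenue", 0.60),
    ("AI data center revenue", 0.80),
]

for label, rate in targets:
    for years in (3, 5):
        multiple = growth_multiple(rate, years)
        print(f"{label}: {rate:.0%} a year for {years} years -> {multiple:.1f}x")

# Tripling earnings per share within three to five years implies these annual rates.
for years in (3, 5):
    rate = implied_annual_rate(3.0, years)
    print(f"tripling in {years} years requires about {rate:.1%} a year")
```

Under these assumptions, five years at 60% multiplies the starting figure about 10.5 times, while tripling earnings needs roughly 44% a year over three years or about 25% a year over five.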

For context, Nvidia CEO Jensen Huang has painted an even bigger picture. He believes the broader AI infrastructure market will reach $3 trillion to $4 trillion by 2030. AMD is claiming $1 trillion of addressable opportunity in its own data center chip category, which includes CPUs, networking chips, and AI accelerators. The math suggests AMD isn’t competing for the entire market; it’s targeting a specific layer.
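The comparison between the two forecasts can be sketched as a simple ratio. The snippet below uses only the figures quoted above. It assumes the two forecasts can be compared directly, which may not hold, since they come from different companies and may define the market differently; the variable and function names are illustrative.

```python
# Figures quoted in the article, in trillions of US dollars (2030 forecasts).
AMD_DATA_CENTER_CHIP_TAM = 1.0
NVIDIA_AI_INFRA_RANGE = (3.0, 4.0)


def fraction_of(part: float, whole: float) -> float:
    """Return part as a fraction of whole."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return part / whole


low_estimate, high_estimate = NVIDIA_AI_INFRA_RANGE

# A smaller total market makes AMD's category a larger fraction of it, and vice versa.
largest_fraction = fraction_of(AMD_DATA_CENTER_CHIP_TAM, low_estimate)
smallest_fraction = fraction_of(AMD_DATA_CENTER_CHIP_TAM, high_estimate)

print(
    f"AMD's chip category would be {smallest_fraction:.0%} to "
    f"{largest_fraction:.0%} of the broader forecast"
)
```

On these numbers, AMD’s addressable category works out to roughly a quarter to a third of the infrastructure total Huang describes.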

The company has precedent for executing against ambitious targets. AMD has steadily taken share from Intel in CPUs for years, moving from single-digit market share to nearly 50% in recent quarters. Su highlighted this track record: “AMD has never been better positioned,” she said at analyst day.

What makes AMD’s forecast credible isn’t just the OpenAI deal or historical wins against Intel. It’s the company’s product roadmap. The next-generation MI400 series of AI chips is set to launch in 2026 with multiple variants targeting both scientific applications and generative AI.

AMD is also building a complete server rack system directly comparable to Nvidia’s GB200 NVL72 offering, a sign that the company is taking a systems-level approach to AI infrastructure.

The MI400 represents a leap forward. Early benchmarks and customer feedback on the predecessor MI350 have been positive, with Oracle already deploying AMD chips at scale. The company’s Instinct GPUs are now used by seven of the world’s top 10 AI companies.

Building an Ecosystem

Beyond hardware, Su emphasized that AMD has “built an M&A machine.” The company has acquired ZT Systems (a server builder), MK1 (an AI software company), and several other software startups focused on the tools needed to run AI applications. Chief Strategy Officer Mat Hein signaled the strategy: “We’ll continue to do AI software tuck-ins.”

This acquisition approach addresses a critical weakness AMD has historically faced against Nvidia. Nvidia’s CUDA ecosystem and software tooling have been the real competitive moat, not just the chips themselves. By acquiring companies that build the middleware, frameworks, and infrastructure that make AI deployment easier, AMD is attempting to narrow that advantage.

Despite the bullish projections, Nvidia still dominates. The company controls over 90% of the high-end AI accelerator market. Investors and analysts have grown skeptical of claims that AMD can significantly erode this position, not because AMD’s chips aren’t competitive, but because switching costs, software ecosystem lock-in, and customer inertia create a powerful incumbent advantage.

Yet the OpenAI partnership, the MI400 roadmap, and AMD’s demonstrated ability to win market share in CPUs suggest this isn’t bluster. OpenAI and other large AI infrastructure buyers have sufficient scale and leverage to require alternatives to Nvidia pricing and availability. AMD is betting it can be that alternative.

AMD’s analyst day projections will be tested on three fronts. First, whether the MI400 series delivers performance gains sufficient to justify customer migrations. Second, whether AMD can scale production to meet demand without repeating historical capacity constraints. And third, whether software ecosystems mature fast enough to make AMD chips as attractive as Nvidia’s.

The $1 trillion market forecast and tripled earnings target aren’t inevitable. But they’re no longer delusional. AMD has spent years building credibility in data centers. Now, with products, partnerships, and capital alignment, it’s positioning itself as the legitimate alternative in the trillion-dollar AI infrastructure market.